Group in Charge: PI Takayuki KANDA group (JP)

Kyoto University is working on machine learning of social interaction behaviors in a retail store scenario. In this approach, a robot autonomously learns how to interact from observation data of human-human interactions. The original plan for this work package consisted of two parts: first, learning interactions by observing humans; second, connecting interaction learning to a knowledge base.

Towards these goals, we took the following approach.

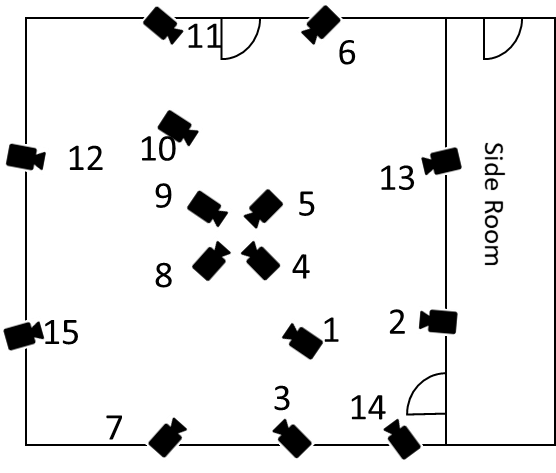

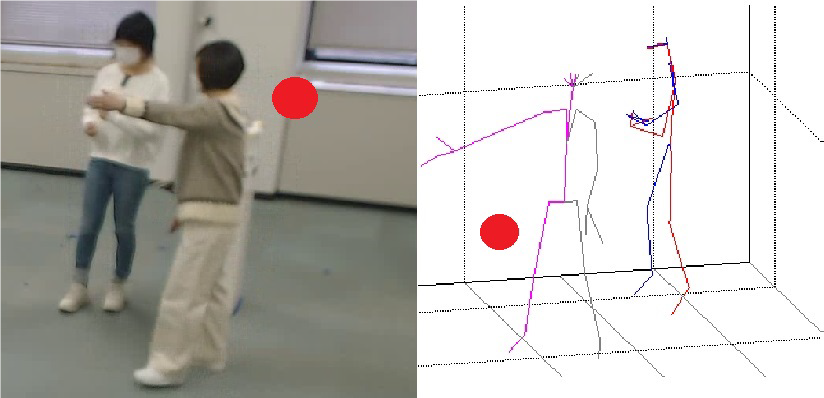

Building an in-lab data collection environment

To train a robot to perform social interactions, data containing examples of interaction behaviors is first required. Therefore, we prepared a data collection system to record social interactions among humans. Since it is difficult to collect data from actual retail stores, we first aimed to collect role-played interactions in the laboratory. To collect this data, we installed 15 depth sensors (Azure Kinect) in a 7 x 8 m room arranged to resemble a store environment (see Figure wp3.1). Combining multiple sensors makes it possible to continuously track the whole-body movements of all people in the space. We also prepared cameras and microphones to record video and audio, and speech-to-text software to automatically transcribe the speech of role-play participants. In this way, the information relevant to the social interactions can be collected and later used to train a robot.

Identifying typical interactions through interviews

In order to collect human-to-human interaction data in the store clerk scenario, it is first important to understand what kind of actions are taking place in the store. Therefore, we conducted interviews with 12 people who had experience working in retail stores. They talked about working in the store and interacting with other store employees and customers. As a result, we were able to broadly categorize the tasks into 11 types, including customer service and inventory management. Furthermore, we clarified the types of interactions they often have with customers and other store employees.

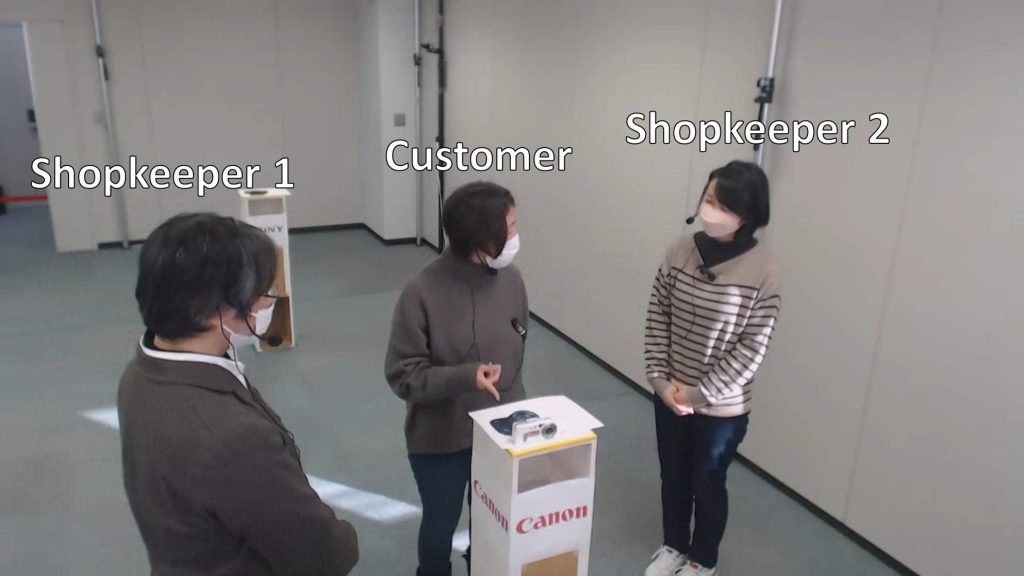

Collecting data on interactions between people

Based on the results of the retail store staff interviews, we designed interaction scenarios to role-play typical interactions between store staff and customers. We set up a 7 x 8 m indoor space that resembled a camera store, had participants role-play interactions between store staff and customers, and recorded their speech and actions. In each data collection session, two clerks (two of three repeat participants with service experience) and one customer role-played multiple scenarios (Figure wp3.2). Participants’ movements were tracked over time using the Azure Kinect sensor array. Speech was recorded using each participant’s headset microphone, and utterances were transcribed using automatic speech recognition software.

To elicit a variety of behaviors and interactions, we assigned roles to the staff (a manager who can do all of the store’s tasks and a new clerk who can only do some) and to the customers (portrait photographers, landscape photographers, etc.). To enable learning of social interaction behavior within time and resource constraints, we specified the camera attributes (price, weight, color, resolution, camera-specific features) that customers should mainly talk about. In total, 28 participants (25 customer participants, 3 store clerk participants) recorded 714 sessions (approximately 40 hours of data).

In addition to the above, a second data collection was conducted to focus on ‘interactive motions,’ such as gestures and body postures (see Figure wp3.4). Using a similar camera store scenario, we recruited participants to perform one-to-one customer-shopkeeper interactions. Three participants with prior experience in customer service took turns role-playing as shopkeepers, and we recruited 30 customer participants (aged 19 to 60, 13 male and 17 female). In total, 394 trials of interaction data (mean 8 min., SD 3.5 min.; 3170 min. total) were collected.

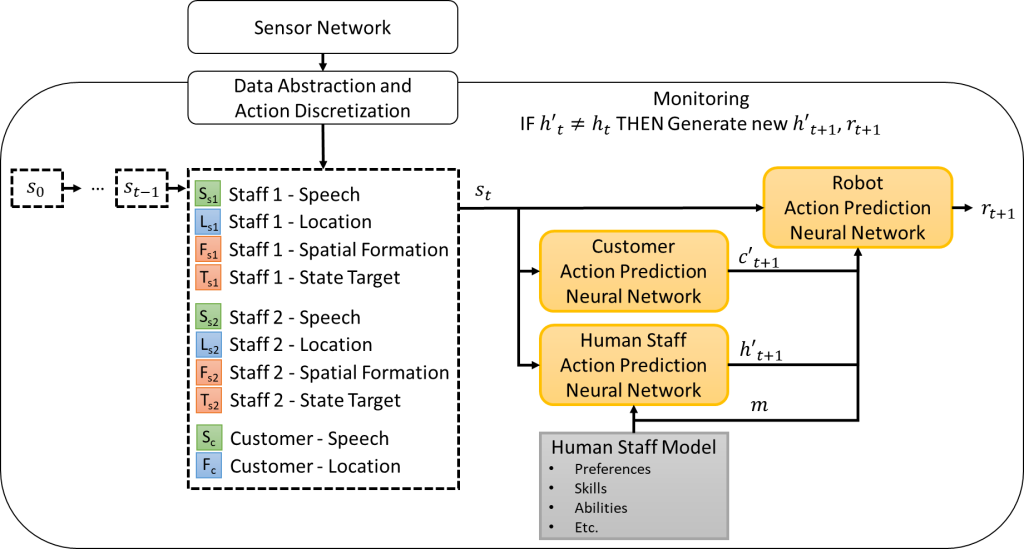

Designing a learning system for social interaction with both staff members and customers

The purpose of the learning system is to learn the social interaction behavior of store clerks from examples of human-human interactions involving store clerks, so that the robot can function as a store clerk in real interactions with people. Building on previous research, we drafted a preliminary design of the full system that lets the clerk robot operate at run time. Its configuration is shown in the learning system diagram. The system predicts the behavior of customers and fellow employees, and uses those predictions to determine the next appropriate action for the robot to take. The learning system is trained on the large amount of human-human interaction data collected as described above.

Achievement: A summary of the results of the retail store staff interviews, including a description of the types of tasks performed and the ways in which staff and customers interact, together with a proposed architecture for learning to imitate such interaction behaviors, is presented in the paper below. Additionally, we are planning one publication to present the dataset and social learning results of the interaction scenario with multiple staff.

M Doering, D Brščić, T Kanda. Learning Social Robot Behaviors for Interacting with Staff and Customers. Workshop on Machine Learning in Human-Robot Collaboration: Bridging the Gap, 17th ACM/IEEE International Conference on Human-Robot Interaction (HRI 2022), March 2022.

Data processing for learning store clerk model

To make the above data usable for machine learning, we preprocessed it to reduce its dimensionality and the effects of sensor noise. This includes removing spurious measurements from the tracking data so that each participant can be tracked continuously throughout each session. Then, an optimization algorithm was applied to divide each trajectory into "moving" and "stopping" segments, and the stopped segments were clustered to find common stopping positions within the room. We also performed action segmentation to discretize the data into input-output pairs that can be used to train the learning system. Each action consists of an utterance, a current position, a movement target, and a movement starting point. The multiple shopkeeper dataset contains approximately 10,000 actions by manager employees, 13,000 actions by new employees, and 14,000 actions by customers.
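As a rough illustration of the segmentation and clustering steps, the following Python sketch splits a 2D trajectory into stopped runs and groups the resulting stop locations. It is not the optimization algorithm used in this work, whose details are not given here: a simple speed threshold and a greedy radius-based clustering are substituted, and all names and parameters (`stop_centroids`, `cluster_stops`, `speed_thresh`, `radius`, and the cluster-id encoding in `Action`) are hypothetical.

```python
from dataclasses import dataclass
from math import hypot
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # (x, y) position in meters


@dataclass
class Action:
    """One discretized action with the four fields named in the text.

    Positions are encoded as stop-cluster ids (an assumption of this sketch).
    """
    utterance: str
    current_position: Optional[int]
    movement_target: Optional[int]
    movement_origin: Optional[int]


def _mean(points: List[Point]) -> Point:
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def stop_centroids(track: List[Point], dt: float,
                   speed_thresh: float = 0.2, min_samples: int = 3) -> List[Point]:
    """Return the centroid of every run whose speed stays below speed_thresh (m/s).

    Stand-in for the unspecified optimization-based segmentation.
    """
    centroids: List[Point] = []
    run: List[Point] = []
    for prev, cur in zip(track, track[1:]):
        speed = hypot(cur[0] - prev[0], cur[1] - prev[1]) / dt
        if speed < speed_thresh:
            run.append(cur)          # still stopped: extend the current run
        else:
            if len(run) >= min_samples:
                centroids.append(_mean(run))
            run = []                 # moving: close any open run
    if len(run) >= min_samples:
        centroids.append(_mean(run))
    return centroids


def cluster_stops(centroids: List[Point],
                  radius: float = 0.5) -> Tuple[List[int], List[Point]]:
    """Greedily assign each stop to the first cluster whose center is within radius."""
    members: List[List[Point]] = []
    labels: List[int] = []
    for c in centroids:
        for i, pts in enumerate(members):
            cx, cy = _mean(pts)
            if hypot(c[0] - cx, c[1] - cy) <= radius:
                pts.append(c)
                labels.append(i)
                break
        else:
            members.append([c])      # no nearby cluster: start a new one
            labels.append(len(members) - 1)
    return labels, [_mean(pts) for pts in members]
```

With the cluster labels in hand, an action could then be assembled as, for example, `Action("May I help you?", current_position=0, movement_target=1, movement_origin=0)`, where the utterance is invented for illustration.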

Learning an interaction model including pointing

Until now, imitation learning methods for interactive social behaviors have been limited to speech and locomotion, and have not included other interactive motions such as gestures and body posture. For example, pointing to an object is a gesture that humans often use during interactions (see Figure wp3.4). Analyzing the dataset described above, we found that gestures were used very frequently: store employees used pointing gestures about 8% of the time during interactions. Therefore, to correctly imitate pointing gestures, we used an existing model of pointing to automatically detect pointing gestures and a novel heat-map-based method to automatically find the common targets of pointing gestures. Furthermore, the interactive motions accompanying the representative utterances that the robot shopkeeper must speak were mapped to the robot’s body model. Thus, we used the human-human interaction data described above to train a system that can imitate appropriate interaction behaviors, including interactive motions.
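To give a sense of what a heat-map-based search for common pointing targets might look like, the sketch below bins estimated pointed-at locations into a grid and reports the most frequently hit cells. It is only an illustration under stated assumptions: the actual method is not detailed here, the input is assumed to be 2D floor-plane points already produced by a pointing detector, and the function names, cell size, and count threshold are hypothetical.

```python
from collections import Counter
from typing import Iterable, List, Tuple

Point = Tuple[float, float]  # estimated pointed-at location (x, y) in meters
Cell = Tuple[int, int]       # grid cell index


def pointing_heatmap(points: Iterable[Point], cell: float = 0.5) -> Counter:
    """Count how many pointing estimates fall into each grid cell."""
    heat: Counter = Counter()
    for x, y in points:
        heat[(int(x // cell), int(y // cell))] += 1
    return heat


def common_targets(heat: Counter, min_count: int = 3,
                   cell: float = 0.5) -> List[Point]:
    """Return centers of cells hit at least min_count times, most frequent first."""
    hot = [(n, ij) for ij, n in heat.items() if n >= min_count]
    hot.sort(key=lambda t: (-t[0], t[1]))
    return [((i + 0.5) * cell, (j + 0.5) * cell) for _, (i, j) in hot]
```

A coarse grid keeps the sketch simple; a smoothed density estimate with peak detection would be a natural alternative when pointing estimates are noisy.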

Achievement: The preliminary work on learning 'interactive motions' within the framework of data-driven imitation learning is presented in the workshop paper below:

Y Jiang, M Doering, T Kanda. Towards Imitation Learning of Human Interactive Motion. Workshop on Artificial Intelligence for Social Robots Interacting with Humans in the Real World (intellect4hri), 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2022), October 2022.

Detection and prevention of mistakes during interactions

While data-driven imitation learning can effectively generate robot behaviors for human-robot interaction, it is difficult to completely avoid interaction errors that cause interaction breakdown (see Figure wp3.5). Moreover, such errors are not included in the collected datasets, as they do not occur often in human-human interactions, so the generated robot behavior models have no learned behavior for preventing them and are not equipped to correct them when they occur. To achieve more robust error handling, we analyzed the types of errors that occur in social imitation learning for human-robot interaction. Building on previous research, we focused on two robot behavior generation systems: one with data abstraction and one without. We classified the interaction errors that frequently occur in these systems into categories and summarized the resulting interaction patterns. We found that many of these errors degrade the quality of the interaction and sometimes cause human frustration and confusion. Therefore, we are researching methods to automatically detect such interaction errors and enable robots to recover autonomously.

Achievement: The methods developed for automatic detection of robot errors within the framework of data-driven imitation learning, and the experimental results from using these methods, are presented in:

J Ravishankar, M Doering, T Kanda. Analysis of Robot Errors in Social Imitation Learning. Workshop on Artificial Intelligence for Social Robots Interacting with Humans in the Real World (intellect4hri), 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2022), October 2022.

J Ravishankar, M Doering, T Kanda. Zero-Shot Learning to Enable Error Awareness in Data-Driven HRI. 19th ACM/IEEE International Conference on Human-Robot Interaction (HRI 2024). March 2024.

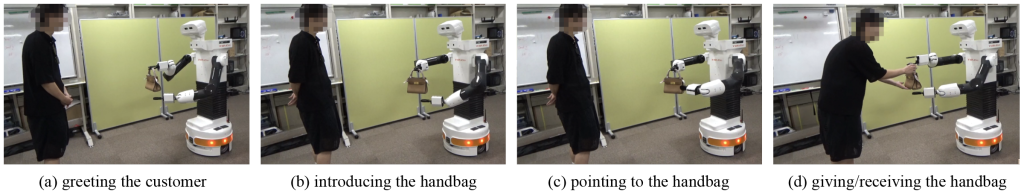

Modeling of robot shopkeeper idle hand behavior

In retail settings, a robot’s one-handed manipulation of objects can come across as thoughtless and impolite, thus creating a negative customer experience. To solve this problem, we first observed how human shopkeepers interact with customers, specifically focusing on their hand movements during object manipulation. From the observation and analysis of shopkeepers’ hand movements, we identified an essential element of their idle hand movements: "support" provided by the idle hand as the primary hand manipulates an object. Based on this observation, we proposed a model that coordinates the movements of a robot’s idle hand with its primary task-engaged hand, emphasizing its supportive behaviors (see Figure wp3.6). In a within-subjects study, 20 participants interacted with robot shopkeepers under different conditions to assess the impact of incorporating support behavior with the idle hand. The results show that the proposed model significantly outperforms a baseline in terms of politeness and competence, suggesting enhanced object-based interactions between the robot shopkeepers and customers.

Achievement: The proposed model of support hand behavior, which can potentially be used with robots trained using social imitation learning, is presented in:

X Pan, M Doering, T Kanda. What is your other hand doing, robot? A model of behavior for shopkeeper robot's idle hand. 19th ACM/IEEE International Conference on Human-Robot Interaction (HRI 2024). March 2024.