Group in Charge: PI Beetz group (GER), Co-PI Aurélie Clodic group (FR)

The University of Bremen is working towards a framework for cognition-enabled robots that perform everyday activities. The robots use the Cognitive Robot Abstract Machine (CRAM) as their cognitive architecture [Beetz2010], which allows general actions to be parameterised so that specific task variations can be executed on different robots and in changing environments.

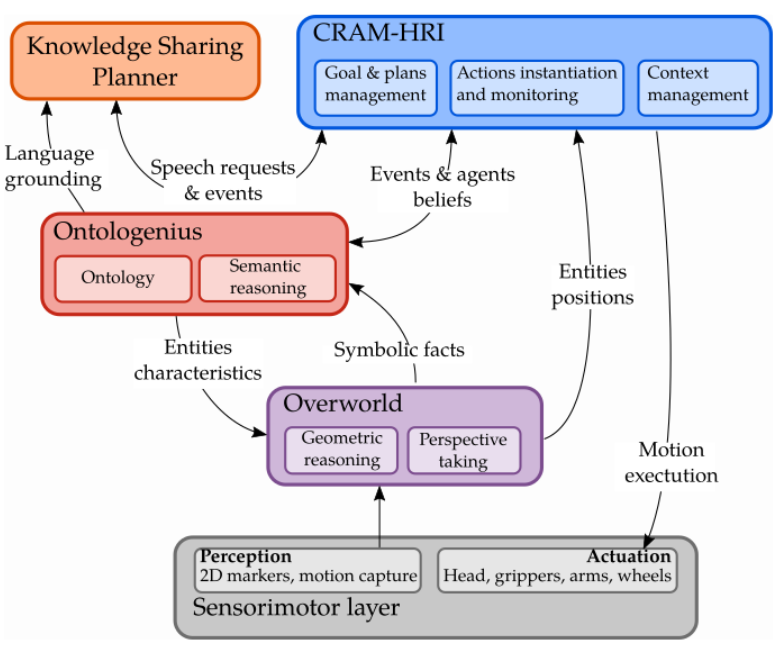

We have developed the CRAM-HRI framework to enable efficient communication between human and robot agents. It is the result of a cooperation between the University of Bremen and LAAS. Additionally, we have extended the existing perception and knowledge frameworks to model different belief states, as explained in the following.

CRAM-HRI: A Cognition-enabled Framework for Human-Robot Interaction

The work conducted on this topic aimed to provide a high-level multi-agent interaction framework that improves human-robot interaction by serving two main objectives. The first objective is to establish a shared understanding in the form of a mutual belief. Such a belief is constructed by grounding the communicated object in physical objects within the world. The second objective is to negotiate and disambiguate the communicated object in relation to comparable objects. CRAM-HRI is built upon the existing Cognitive Robot Abstract Machine (from UniHB) and utilizes DACOBOT architecture components (from LAAS).

The capabilities of the CRAM-HRI system have been assessed as a proof of concept in the context of the "director task" (DT), which originates from psychological research and was designed to examine the use of Theory of Mind in referential communication [Sarthou2021]. The task helps evaluate the system’s ability to understand and engage in complex communication scenarios in which two agents have different roles. One participant, the director, instructs the other participant, the receiver, on actions to be performed on objects. The objects are in a vertical grid placed between the agents. However, some cells of the grid are hidden from the director’s point of view, so some objects are known only to the receiver, which creates a belief divergence. To act correctly, the receiver thus has to take the perspective of the director to estimate the director’s belief about the state of the grid.

As depicted in Figure wp1.1, the CRAM-HRI system is integrated into the overall DACOBOT architecture as the action and communication execution planner. It extends the existing CRAM designator class to include the interaction between multiple agents. Additionally, it defines expressive and communication process modules by introducing the "TELL", "ASK" and "LISTEN" protocols.
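The belief divergence in the director task can be illustrated with a minimal Python sketch. It is not taken from CRAM-HRI or DACOBOT: the names `GridObject`, `director_belief` and `resolve_reference`, the object attributes, and the "act"/"ask" outcomes are assumptions made purely for illustration. The receiver resolves an instruction against the objects it estimates the director can see, and falls back to asking when the description remains ambiguous.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GridObject:
    name: str
    kind: str
    color: str
    cell: tuple                 # (row, column) in the vertical grid
    hidden_from_director: bool  # True if the cell is occluded on the director's side


def director_belief(objects):
    """Objects that the receiver estimates the director can see."""
    return [o for o in objects if not o.hidden_from_director]


def resolve_reference(objects, **attributes):
    """Resolve a description from the director's estimated perspective.

    Returns ("act", obj) if exactly one visible object matches,
    otherwise ("ask", candidates) to trigger a clarification.
    """
    candidates = [
        o for o in director_belief(objects)
        if all(getattr(o, key) == value for key, value in attributes.items())
    ]
    if len(candidates) == 1:
        return ("act", candidates[0])
    return ("ask", candidates)


grid = [
    GridObject("block_1", "block", "red", (0, 0), hidden_from_director=False),
    GridObject("block_2", "block", "red", (1, 2), hidden_from_director=True),
    GridObject("block_3", "block", "green", (2, 1), hidden_from_director=False),
]

# The receiver sees two red blocks, but the director can only mean block_1.
decision, target = resolve_reference(grid, kind="block", color="red")
```

In this toy setting, "the red block" is ambiguous from the receiver's own viewpoint but unambiguous once the hidden cell is excluded, whereas "the block" alone still matches two visible objects and would call for a clarifying question.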

Figure wp1.1: An overview of the robotic architecture in which the CRAM-HRI framework has been integrated. The arrows represent the information exchanged between the components.

Multi-Agent Environment Modeling

Scene graphs enable the representation of the compositional structure of environments, where each component and its configurations are modeled as sub-graphs within the scene graph.

This allows selective consideration of only relevant parts of the graph based on the agent’s viewpoint.

A robot can start with a coarse object model, refine it with additional knowledge, and insert it at different positions in the graph as the object moves.

It can further place a virtual camera in the scene that mimics the perspective of a human.
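The scene-graph operations described above (selecting a sub-graph, refining a coarse object model, and re-inserting an object elsewhere as it moves) can be sketched as follows. This is a simplified illustration in plain Python, not the data model used in the project; `SceneNode`, `find_parent`, `reparent` and the example scene are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)  # compare nodes by identity, not by content
class SceneNode:
    name: str
    position: tuple = (0.0, 0.0, 0.0)  # pose relative to the parent node
    children: list = field(default_factory=list)

    def add(self, child):
        self.children.append(child)
        return child

    def find(self, name):
        """Depth-first search for a node by name."""
        if self.name == name:
            return self
        for child in self.children:
            hit = child.find(name)
            if hit is not None:
                return hit
        return None

    def subtree_names(self):
        """Names of all nodes in the sub-graph rooted at this node."""
        names = [self.name]
        for child in self.children:
            names.extend(child.subtree_names())
        return names


def find_parent(root, name):
    for child in root.children:
        if child.name == name:
            return root
        hit = find_parent(child, name)
        if hit is not None:
            return hit
    return None


def reparent(root, name, new_parent_name):
    """Move a node (with its whole sub-graph) to a different parent."""
    node = root.find(name)
    find_parent(root, name).children.remove(node)
    root.find(new_parent_name).children.append(node)


kitchen = SceneNode("kitchen")
table = kitchen.add(SceneNode("table", (1.0, 0.0, 0.0)))
shelf = kitchen.add(SceneNode("shelf", (0.0, 2.0, 0.0)))
cup = table.add(SceneNode("cup", (0.0, 0.0, 0.75)))  # coarse model: a single node

cup.add(SceneNode("handle", (0.04, 0.0, 0.0)))  # refine the model with more detail
reparent(kitchen, "cup", "shelf")               # the cup was moved to the shelf

relevant = kitchen.find("shelf").subtree_names()  # consider only the shelf sub-graph
```

A virtual camera could be represented in the same way, as an additional node attached at the estimated head pose of the human.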

Nonetheless, the use of detailed environment models presents challenges in data management, such as slower performance and increased memory usage.

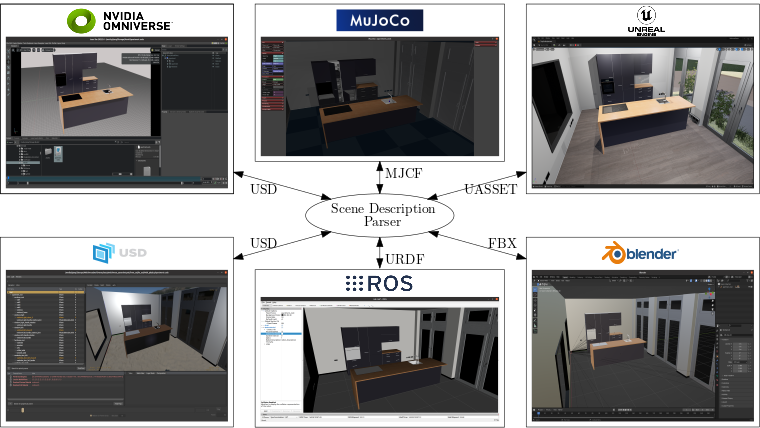

To address these challenges, we developed an infrastructure based on the Universal Scene Description (USD) format [Nguyen2023]. USD is an open-source, industry-standard format for describing and exchanging 3D scenes and assets. It provides a low-cost way of representing, manipulating and testing environments.

However, different applications require environment information in specific formats, such as URDF, which is often used in ROS-based systems.

To this end, we developed the Multiverse parser, which streamlines the conversion of the USD format into common formats used in different application areas, including ROS-based systems, simulation and 3D modeling (Figure wp1.2).
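To give an impression of what such a conversion has to produce on the URDF side, the following sketch writes a scene hierarchy as URDF links connected by fixed joints. It is not the Multiverse parser and does not read USD files: the function `scene_to_urdf` and its tuple-based input are assumptions for illustration, and only the standard URDF elements `robot`, `link`, `joint`, `origin`, `parent` and `child` are used.

```python
import xml.etree.ElementTree as ET


def scene_to_urdf(name, prims):
    """Serialize a scene hierarchy as a URDF string.

    prims: list of (prim_name, parent_name_or_None, (x, y, z)) tuples,
    a hypothetical stand-in for the prims of a parsed scene description.
    """
    robot = ET.Element("robot", name=name)

    # Every prim becomes a link.
    for prim_name, _, _ in prims:
        ET.SubElement(robot, "link", name=prim_name)

    # Every parent-child relation becomes a fixed joint.
    for prim_name, parent, xyz in prims:
        if parent is None:
            continue  # the root link has no joint above it
        joint = ET.SubElement(
            robot, "joint", name=f"{parent}_to_{prim_name}", type="fixed"
        )
        ET.SubElement(
            joint, "origin", xyz=" ".join(str(v) for v in xyz), rpy="0 0 0"
        )
        ET.SubElement(joint, "parent", link=parent)
        ET.SubElement(joint, "child", link=prim_name)

    return ET.tostring(robot, encoding="unicode")


urdf = scene_to_urdf(
    "kitchen",
    [
        ("world", None, (0.0, 0.0, 0.0)),
        ("table", "world", (1.0, 0.0, 0.0)),
        ("cup", "table", (0.0, 0.0, 0.75)),
    ],
)
```

A real converter additionally has to handle geometry, inertial properties, articulated joints and unit conventions, which this sketch leaves out.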

Achievement: The Multiverse parser is introduced in a conference paper which is currently under review: Nguyen, G. H., Beßler, D., Stelter, S., Pomarlan, M., and Beetz, M. (2023). Translating universal scene descriptions into knowledge graphs for robotic environment. Under review for ICRA 2024. https://arxiv.org/abs/2310.16737

KnowRob-Me and KnowRob-You

Each of us has, to some extent, his or her own understanding of the world, which is influenced by what we believe. When interacting with one another, we also have expectations about what the other person knows, believes, or can do well. This in turn influences our decision making in the interaction.

A robot with a similar skill would not only need to be able to imagine the physical scene from the perspective of the human, but it would further need to interpret the scene with respect to the state of mind of this person.

In our work the meaning of a scene is defined through a logical axiomatization.

We therefore extended our existing infrastructure KnowRob such that it can maintain multiple knowledge bases that encode the meaning of a scene from the perspective of different agents. Each perspective has its own axiomatization from which logical consequences can be derived by a reasoning system.

Through this mechanism, the robot can estimate what the human could have inferred from what the robot believes the human knows.

Interactions between perspectives are considered at the level of the query language in KnowRob, which we extended with modal operators that are indexed by an agent ID.

Through these extensions, KnowRob can maintain the KnowRob-Me and KnowRob-You knowledge bases as separate graphs, and provides means of accessing the knowledge encoded in the different graphs through modal operators that can embed conjunctive queries in their higher-order arguments.
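As an illustration only, the idea of agent-indexed modal operators over separate graphs can be sketched in Python. The class `BeliefStore`, the method `believes`, the agent IDs `"me"` and `"you"`, and the triple vocabulary are assumptions made for this sketch; they are not KnowRob's query language or API. The operator takes a conjunctive query as its argument and evaluates it only against the graph of the given agent.

```python
class BeliefStore:
    """One triple graph per agent, queried through an agent-indexed operator."""

    def __init__(self):
        self.graphs = {}  # agent ID -> set of (subject, predicate, object) triples

    def tell(self, agent, *triples):
        self.graphs.setdefault(agent, set()).update(triples)

    def believes(self, agent, query):
        """Evaluate a conjunctive query inside the graph of one agent.

        query: list of triple patterns; terms starting with '?' are variables.
        Returns a list of variable bindings (dicts).
        """
        solutions = [{}]
        for pattern in query:
            extended = []
            for binding in solutions:
                for triple in self.graphs.get(agent, ()):
                    match = _unify(pattern, triple, binding)
                    if match is not None:
                        extended.append(match)
            solutions = extended
        return solutions


def _unify(pattern, triple, binding):
    """Extend a binding so that the pattern matches the triple, or return None."""
    result = dict(binding)
    for term, value in zip(pattern, triple):
        if term.startswith("?"):
            if result.setdefault(term, value) != value:
                return None  # variable already bound to something else
        elif term != value:
            return None
    return result


kb = BeliefStore()
# What the robot itself knows about the scene.
kb.tell("me", ("cup1", "type", "Cup"), ("cup1", "in", "cell_a"),
              ("cup2", "type", "Cup"), ("cup2", "in", "cell_b"))
# What the robot estimates the human knows (cup2 is hidden from the human).
kb.tell("you", ("cup1", "type", "Cup"), ("cup1", "in", "cell_a"))

query = [("?x", "type", "Cup"), ("?x", "in", "?cell")]
known_to_me = sorted(b["?x"] for b in kb.believes("me", query))
known_to_you = kb.believes("you", query)
```

Comparing the two answer sets exposes the belief divergence: objects that appear only under the robot's own operator are candidates for explanation or clarification.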

Flexible perception framework to model belief states for HRI

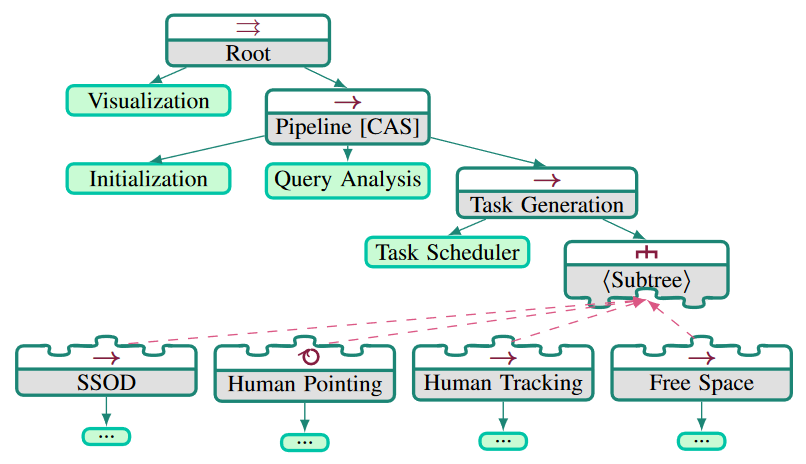

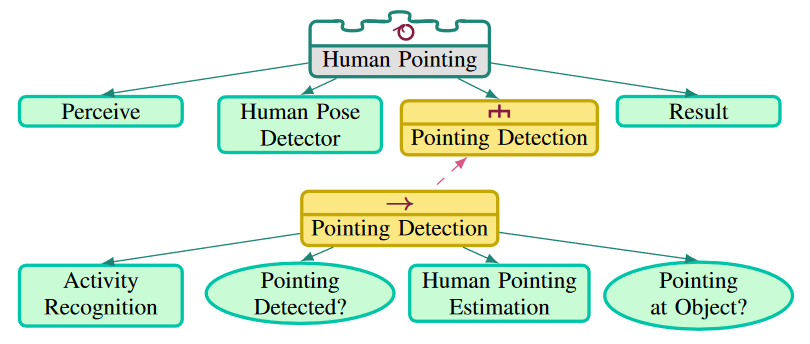

The existing perception framework, which is integrated in the perception-action loop of the robot and can communicate with CRAM, was extended to model belief states as task or behavior trees [Mania2024]. A behavior tree is a directed rooted tree in which the leaves represent execution nodes, as visualized in Figure wp1.3. Here, one of the first steps of the perception system is to analyze a given query and then schedule tasks. Based on the results of a leaf, such as the query result or the task schedule, different subtrees can be added to the task tree if they are necessary for the successful completion of a task request. Figure wp1.4 depicts a Human Pointing task. It includes a perception routine, a human pose detector and a pointing detector, each of which again consists of execution leaves, to finally form a result (whether or not the pointing was successfully detected).
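The dynamic extension of a task tree can be illustrated with a small, self-contained behavior tree in Python. This is a sketch under simplifying assumptions, not the perception framework itself: `Leaf`, `Sequence`, `make_task_tree`, the blackboard keys and the placeholder detectors are all hypothetical. A query-analysis leaf appends the Human Pointing subtree to the running tree only if the query requires it.

```python
SUCCESS, FAILURE = "success", "failure"


class Leaf:
    """Execution node: runs an action on a shared blackboard."""

    def __init__(self, name, action):
        self.name, self.action = name, action

    def tick(self, blackboard):
        return SUCCESS if self.action(blackboard) else FAILURE


class Sequence:
    """Runs its children in order and fails as soon as one child fails."""

    def __init__(self, name, children=None):
        self.name = name
        self.children = list(children or [])

    def tick(self, blackboard):
        i = 0
        # Index-based loop so that children appended during the tick also run.
        while i < len(self.children):
            if self.children[i].tick(blackboard) == FAILURE:
                return FAILURE
            i += 1
        return SUCCESS


def perceive_scene(bb):
    bb["image"] = "rgbd_frame"  # placeholder for sensor data
    return True


def detect_human_pose(bb):
    bb["pose"] = {"arm_raised": True}  # placeholder detector output
    return True


def detect_pointing(bb):
    bb["pointing_detected"] = bb["pose"]["arm_raised"]
    return bb["pointing_detected"]


def make_task_tree():
    root = Sequence("perception_task")

    def analyze_query(bb):
        # Schedule the subtree needed for this query, if any is known.
        if bb.get("query") == "detect pointing":
            root.children.append(Sequence("human_pointing", [
                Leaf("perception_routine", perceive_scene),
                Leaf("human_pose_detector", detect_human_pose),
                Leaf("pointing_detector", detect_pointing),
            ]))
            return True
        return False

    root.children.append(Leaf("analyze_query", analyze_query))
    return root


tree = make_task_tree()
blackboard = {"query": "detect pointing"}
status = tree.tick(blackboard)
```

The tree starts with a single leaf and grows to two children during the first tick; an unknown query leaves the tree unchanged and makes the task fail at the analysis step.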

References

[Beetz2010] Beetz, M., Mösenlechner, L., and Tenorth, M. (2010). CRAM—A Cognitive Robot Abstract Machine for everyday manipulation in human environments. In IEEE/RSJ IROS.

[Mania2024] Mania, P., Stelter, S., Kazhoyan, G., and Beetz, M. (2024). An open and flexible robot perception framework for mobile manipulation tasks. In 2024 International Conference on Robotics and Automation (ICRA). IEEE. Under review.

[Nguyen2023] Nguyen, G. H., Beßler, D., Stelter, S., Pomarlan, M., and Beetz, M. (2023). Translating universal scene descriptions into knowledge graphs for robotic environment. Under review for ICRA 2024.

[Sarthou2021] Sarthou, G., Mayima, A., Buisan, G., Belhassein, K., and Clodic, A. (2021). The Director Task: a psychology-inspired task to assess cognitive and interactive robot architectures. In IEEE RO-MAN.