Group in Charge: PI Michael Beetz group (GER), Co-PI Aurélie Clodic group (FR), Takayuki Kanda (JP)

The University of Bremen develops KnowRob, a knowledge-base framework for robots [Beetz2018]. It builds on symbolic structures in the form of Knowledge Graphs (KGs) and underpins them with explicit semantics, both to support reasoning and to enable robots to explain their actions in terms of what they are doing, why, and how.

We have developed extensions of KnowRob that target HRI scenarios.

These include extending the knowledge model with HRI-related notions, integrating infrastructure for multi-agent environment modeling, and simulation-based reasoning about human behavior.

Digital Twin Knowledge Bases

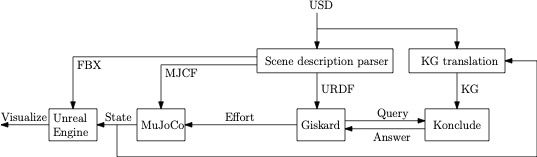

Modern scene graph frameworks such as Universal Scene Description (USD) can handle environment models with an unprecedented level of detail, from which almost photo-realistic visualizations can be generated. However, to be practically useful for a robot, an environment model must be connected to the robot's task and body. This link is crucial for determining which objects can be used to perform a task and how they can be handled. Our approach is to represent such information as a linked KG covering the robot, the environment, and the task. In our work, we investigated whether standardized scene graphs in the USD format can be automatically transformed into a representation that enables robots to model their environment while also making the model interpretable through semantic queries.

We therefore propose a mapping from the USD format to a KG representation whose structure is fixed by an ontology, which also supports the translation process through automated reasoning [Nguyen2023]. Definitions in the ontology are aligned with a common ontology, enabling links to other knowledge sources. The resulting KG combines data mapped from the USD file, inferences made by the reasoner, and dynamic data computed by a simulator, yielding a more comprehensive relational model of the environment.
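To make the mapping idea more concrete, here is a minimal Python sketch; it is not the implementation from [Nguyen2023]. It assumes a hypothetical, simplified `Prim` record instead of the real USD bindings, and a hypothetical `usd:` vocabulary plus a `TYPE_TO_CLASS` table standing in for the ontology that fixes the target representation. Each prim becomes a typed node, the hierarchy encoded in its path becomes parent links, and its attributes become property triples.

```python
from dataclasses import dataclass, field

@dataclass
class Prim:
    """Hypothetical, simplified stand-in for a USD prim (not the pxr API)."""
    path: str                      # e.g. "/World/Fridge"
    type_name: str                 # USD schema type, e.g. "Xform" or "Mesh"
    attributes: dict = field(default_factory=dict)

# Hypothetical mapping from USD schema types to ontology classes.
TYPE_TO_CLASS = {"Xform": "usd:Xform", "Mesh": "usd:Mesh"}

def prims_to_triples(prims):
    """Translate prims into a set of (subject, predicate, object) triples."""
    triples = set()
    for prim in prims:
        # Unknown schema types fall back to a generic class.
        triples.add((prim.path, "rdf:type", TYPE_TO_CLASS.get(prim.type_name, "usd:Prim")))
        # The scene hierarchy is encoded in the prim path; link to the parent prim.
        parent = prim.path.rsplit("/", 1)[0]
        if parent:
            triples.add((prim.path, "usd:hasParent", parent))
        # Each attribute becomes a property triple.
        for name, value in prim.attributes.items():
            triples.add((prim.path, f"usd:{name}", value))
    return triples

scene = [
    Prim("/World", "Xform"),
    Prim("/World/Fridge", "Mesh", {"semanticLabel": "Fridge"}),
]
kg = prims_to_triples(scene)
```

In a real pipeline the prims would more likely come from traversing a stage loaded with the OpenUSD Python bindings (`pxr`), and the triples would be asserted into an RDF/OWL store rather than a Python set.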

We validated the method for automated KG construction in a household scenario and in a robot task execution pipeline in which the motion controller Giskard is informed by inferences made by the reasoner Konclude (Figure usd-pipeline).

The reasoner draws these inferences from the USD-derived KG combined with additional background knowledge about objects in the scene.
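How scene facts and background knowledge combine can be illustrated with a toy forward-chaining reasoner. This is a hedged sketch: it implements only two RDFS-style rules (transitivity of `rdfs:subClassOf` and propagation of `rdf:type` along it) over plain Python triples, whereas the pipeline above relies on the OWL reasoner Konclude, which supports far more expressive inferences. The `Fridge` and `Container` facts are hypothetical examples.

```python
def infer_types(scene_facts, background):
    """Naive forward chaining until no new triples are derived, using two rules:
    (A subClassOf B), (B subClassOf C) => (A subClassOf C)
    (x type A), (A subClassOf B)       => (x type B)
    """
    kb = set(scene_facts) | set(background)
    while True:
        sub = {(s, o) for s, p, o in kb if p == "rdfs:subClassOf"}
        new = {(a, "rdfs:subClassOf", c) for a, b in sub for b2, c in sub if b == b2}
        new |= {(x, "rdf:type", b) for x, p, a in kb if p == "rdf:type"
                for a2, b in sub if a == a2}
        if new <= kb:          # fixpoint reached: nothing new was derived
            return kb
        kb |= new

# Hypothetical facts: one object from the USD-derived KG, two background axioms.
scene = {("/World/Fridge", "rdf:type", "Fridge")}
background = {
    ("Fridge", "rdfs:subClassOf", "Container"),
    ("Container", "rdfs:subClassOf", "PhysicalObject"),
}
kb = infer_types(scene, background)
containers = sorted(x for x, p, c in kb if p == "rdf:type" and c == "Container")
```

Neither the scene file nor the background knowledge alone states that the fridge is a container; the conclusion only follows from their combination.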

Achievement: The aforementioned work is presented in a conference paper which is currently under review:

Nguyen, G. H., Beßler, D., Stelter, S., Pomarlan, M., and Beetz, M. (2023). Translating universal scene descriptions into knowledge graphs for robotic environment. ICRA 2024. https://arxiv.org/abs/2310.16737

UAivatar – A Simulation Framework for Human-Robot Interaction

Meaningful interaction benefits from both participants having knowledge of each other. Robots are relatively predictable participants in coordinated activities, whereas humans introduce unpredictability through their capacity for free will. This unpredictability makes it challenging to integrate real-time data processing into robotic systems, because the system must adapt to human actions and decisions that may not follow a predetermined pattern. To tackle this problem, we have introduced UAivatar, a simulation framework for human-robot interaction in which a model of the human agent is tightly integrated into the AI software and control architecture of the operating robot [Abdel-Keream2022]. The framework equips robots with rich knowledge about humans, provides access to common ground in the form of information about human activities, and integrates a flexibly controllable and programmable human model into the robot's inner world model, allowing the robot to 'imagine' and simulate humans performing everyday activities.

Beyond symbolic knowledge about humans, the framework makes full use of a Digital Twin World that provides access to the full joint configuration of our agent models, which allows us to simulate HRI in great detail. To help the robot make well-informed decisions about its actions, the framework combines the symbolic knowledge base with modern information processing technologies, such as the physics simulation and rendering mechanisms of game engines, to generate an inner-world knowledge base: a detailed, photo-realistic reconstruction of the robot's environment. The robot can therefore reason geometrically about a scene by virtually looking at it through the vision capabilities of the game engine, and predict the effects of actions through semantic annotations of force-dynamic events monitored in its physics simulation. Because the simulation takes place in a virtual environment, ground-truth data is available throughout.
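The idea of turning physics-simulation state into symbolic, force-dynamic events can be sketched as follows. This is a hypothetical Python illustration rather than UAivatar code: it assumes the simulator reports, per frame, the set of object pairs in contact, and it emits `ContactBegin` and `ContactEnd` annotations whenever that set changes. The event names and the frame format are our assumptions.

```python
def annotate_contact_events(frames):
    """Turn per-frame contact sets into symbolic (event, obj_a, obj_b, time) tuples.

    frames: iterable of (time, contacts), where contacts is a set of
    frozenset({a, b}) pairs reported by a (hypothetical) physics simulator.
    """
    events = []
    previous = set()
    for time, contacts in frames:
        # Pairs that touch now but did not touch in the previous frame.
        for pair in sorted(contacts - previous, key=sorted):
            events.append(("ContactBegin", *sorted(pair), time))
        # Pairs that touched before but no longer do.
        for pair in sorted(previous - contacts, key=sorted):
            events.append(("ContactEnd", *sorted(pair), time))
        previous = contacts
    return events

cup_table = frozenset({"cup", "table"})
hand_cup = frozenset({"hand", "cup"})
frames = [
    (0.0, {cup_table}),            # cup rests on the table
    (0.1, {cup_table, hand_cup}),  # hand grasps the cup
    (0.2, {hand_cup}),             # cup is lifted off the table
]
events = annotate_contact_events(frames)
```

A hand-cup contact beginning followed by the cup-table contact ending is the kind of symbolic trace a robot could query to recognize or predict a pick-up in its inner world.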

Achievement: The preliminary work on the simulation framework for human-robot interaction is presented in the following workshop paper:

Abdel-Keream, M. and Beetz, M. (2022). Imagine a human! UAivatar – Simulation framework for human-robot interaction. Workshop on Artificial Intelligence for Social Robots Interacting with Humans in the Real World (intellect4hri), 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2022), October 2022.

An Ontological Model of Human Preferences

The notion of preferences plays an important role when robots interact with humans, as such interactions can benefit from robots taking human preferences into account. This raises the question of how preferences should be represented to support preference-aware decision making. Several formal accounts of preferences exist. However, these approaches fall short in defining the nature and structure of the options that a robot has in a given situation.

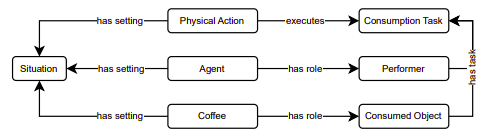

We thus investigated a model of preferences in which options are non-atomic entities defined by the complex situations they bring about [Abdel-Keream2023-1] (Figure wp2.2). This model serves as a structured framework for capturing and organizing user preferences. It was validated against a set of competency questions that the robot must be able to answer in order to understand and address user preferences in HRI scenarios. The model is formulated in a formal language as an extension of the existing SOMA ontology [Beßler2021]. This enables general-purpose reasoners to answer the competency questions, provided that sufficient knowledge about the situation is supplied to them.
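The central idea, that an option is characterized by the situation it brings about rather than being an atomic label, can be illustrated with a small Python sketch. It deliberately simplifies the ontological model: situations are reduced to hypothetical sets of features, user preferences to sets of liked and disliked features, and the competency question "which option leads to the situation the user prefers?" to a simple score. The actual model is expressed in an ontology and queried with general-purpose reasoners, not with this scoring function.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Option:
    """An option, characterized by the situation it brings about."""
    name: str
    outcome: frozenset  # hypothetical: features of the resulting situation

def preferred_option(options, likes, dislikes):
    """Answer 'which option leads to the situation the user prefers most?'
    by counting liked minus disliked features of each outcome situation.
    Ties are resolved in favor of the option listed first."""
    def score(option):
        return len(option.outcome & likes) - len(option.outcome & dislikes)
    return max(options, key=score)

tea = Option("serve_tea", frozenset({"hot_drink", "contains_caffeine"}))
juice = Option("serve_juice", frozenset({"cold_drink", "sugary"}))
water = Option("serve_water", frozenset({"cold_drink"}))
best = preferred_option([tea, juice, water],
                        likes={"cold_drink"}, dislikes={"sugary"})
```

Because preferences attach to features of situations rather than to option names, juice and water share a liked feature, yet the disliked side effect of one of them decides between them.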

Achievement: The preliminary work on human preferences is presented in the following workshop paper:

Abdel-Keream, M., Beßler, D., Janssen, A., Jongebloed, S., Nolte, R., Pomarlan, M., and Porzel, R. (2023). An ontological model of user preferences. In RobOntics workshop. https://ai.uni-bremen.de/papers/keream23preferences.pdf

An Ontological Model of Human Gestures

Gesture recognition allows robots to perceive and respond to human commands and intentions, enhancing their usability and effectiveness in assisting humans. Similarly, the ability to understand and represent gestures enables robots to behave in a way that is easily interpretable by humans. However, existing approaches to robot gesture handling do not take context into account, although context is crucial in many HRI scenarios. We therefore propose to employ a formal ontology to represent the contextual characteristics needed for accurate gesture interpretation.

To this end, we extended the existing SOMA ontology [Beßler2021] with a conceptualization of human gestures [Vanc2023]. The ontology characterizes gestures and scene context, and establishes explicit links between these notions in order to contextualize occurrences of gestures in the form of a knowledge graph. The knowledge graph captures the relationships between gestures, objects in the scene, and the desired actions. This enables the robot both to collect experiences from past interactions and to reason on top of the acquired knowledge.
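The role of context can be illustrated with a small, hypothetical Python sketch in which past interactions are stored as triples linking a gesture, a target object, and the intended action. Interpreting a new gesture then amounts to querying this graph for the action most often associated with the same gesture in the same object context. Predicate names such as `hasGesture` are illustrative assumptions, not the vocabulary of the SOMA extension in [Vanc2023].

```python
from collections import Counter

def record_interaction(kg, interaction_id, gesture, target_object, action):
    """Store one observed interaction as triples about an interaction node."""
    kg.update({
        (interaction_id, "hasGesture", gesture),
        (interaction_id, "hasTargetObject", target_object),
        (interaction_id, "hasIntendedAction", action),
    })

def objects_of(kg, subject, predicate):
    """All objects o such that (subject, predicate, o) is in the graph."""
    return {o for s, p, o in kg if s == subject and p == predicate}

def interpret(kg, gesture, target_object):
    """Return the action most often linked to this gesture in this object context."""
    votes = Counter()
    for node in {s for s, p, o in kg if p == "hasGesture" and o == gesture}:
        if target_object in objects_of(kg, node, "hasTargetObject"):
            votes.update(objects_of(kg, node, "hasIntendedAction"))
    return votes.most_common(1)[0][0] if votes else None

kg = set()
record_interaction(kg, "i1", "point", "cup", "pick_up")
record_interaction(kg, "i2", "point", "cup", "pick_up")
record_interaction(kg, "i3", "point", "drawer", "open")
```

The same pointing gesture resolves to different actions depending on the object it refers to, which is the contextual dependency that a context-free gesture classifier cannot capture.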

We validated the ontology in a kitchen-domain application scenario in which a human operator commands the robot through gestures, using a fixed set of gestures based on a semaphoric model that classifies them discretely.

In ongoing work, we are investigating vector embeddings of context and gesture representations, and the use of a Bayesian neural network to map the resulting feature vector to the action that the human intends the robot to perform.

Achievement: The preliminary work on human gestures is presented in the following workshop paper:

Vanc, P., Stepanova, K., and Beßler, D. (2023). Ontological context for gesture interpretation. In RobOntics workshop. https://ai.uni-bremen.de/papers/vanc23gestures.pdf

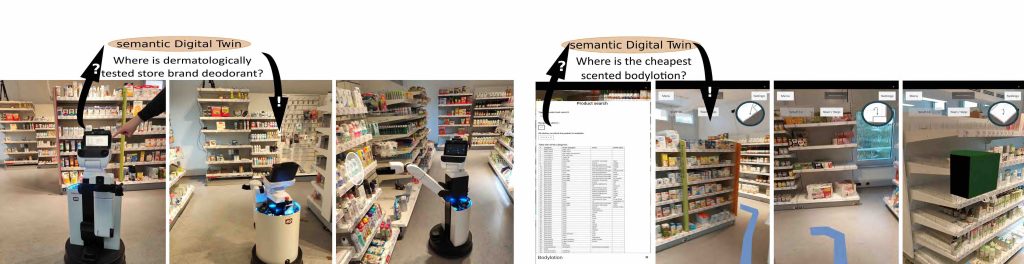

System Usability Evaluation of a Shopping Assistant

To model human-robot interaction in a shopping scenario, we first need a shopping assistant framework that different agents can use to answer user requests. Such a system was proposed in [Kümpel2023-2] and further detailed and evaluated for system usability in [Kümpel2023-1]. The evaluation consisted of a shopping experiment in which customers were asked to search for different articles with and without assistance from a robot or a smartphone assistant, as visualized in Figure wp2.3. The shopping assistant accesses a digital twin knowledge base of the store to retrieve the position of a searched article, so both the robot and the smartphone can use it to reliably locate articles in the store.
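Locating an article in a digital twin knowledge base amounts to following relations from the product to its shelf and the shelf's position. The sketch below is a hypothetical Python illustration with an invented store schema (`storedIn`, `onLayer`, `partOf`, `hasPosition`) and made-up coordinates; it does not reproduce the dynamic query generation of [Kümpel2023-2].

```python
from typing import Optional

# Hypothetical digital-twin store facts as (subject, predicate, object) triples.
STORE_KG = {
    ("product:oat_milk", "rdfs:label", "Oat milk"),
    ("product:oat_milk", "storedIn", "facing:17"),
    ("facing:17", "onLayer", "layer:3"),
    ("layer:3", "partOf", "shelf:A4"),
    ("shelf:A4", "hasPosition", (12.5, 3.0)),  # made-up store coordinates
}

def value(kg, subject, predicate):
    """Return the first object for (subject, predicate), or None."""
    matches = [o for s, p, o in kg if s == subject and p == predicate]
    return matches[0] if matches else None

def locate_article(kg, label) -> Optional[tuple]:
    """Resolve a product label to its shelf and the shelf's position."""
    product = next((s for s, p, o in kg
                    if p == "rdfs:label" and o.lower() == label.lower()), None)
    if product is None:
        return None
    # Follow product -> facing -> shelf layer -> shelf.
    facing = value(kg, product, "storedIn")
    layer = value(kg, facing, "onLayer")
    shelf = value(kg, layer, "partOf")
    return shelf, value(kg, shelf, "hasPosition")
```

Because the lookup depends only on the knowledge base, the same function could serve a robot front end (navigating to the position) and a smartphone front end (displaying it), mirroring the shared digital twin described above.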

The experimental results showed that customers do not need or want a shopping assistant when they know exactly what they are searching for and/or are familiar with the store. In most other cases, customers preferred the smartphone shopping assistant for finding products.

Reasoning about perception belief states for the task of following a human

Behavior trees [Mania2024] can be used for HRI, as we show in an example scenario deployed for RoboCup@Home (Domestic Standard Platform League), where one challenge is to pick up an object, follow a human to a destination, and place the object there. An example of the robot performing this task is depicted in Fig. wp2.4, where the robot continuously observes the human, follows them, and adapts its behavior tree accordingly.
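The following-a-human part of this behavior can be sketched as a small behavior tree. The Python code below is a minimal, self-written illustration, not the implementation used in [Mania2024] or at RoboCup@Home: a Fallback node first tries a Sequence that checks whether the human is perceived and, if so, keeps following; otherwise it falls back to searching. The blackboard keys and leaf functions are hypothetical, and the pick-up and place steps are omitted.

```python
from enum import Enum

class Status(Enum):
    SUCCESS = 1
    FAILURE = 2
    RUNNING = 3

class Sequence:
    """Ticks children in order; returns the first status that is not SUCCESS."""
    def __init__(self, *children):
        self.children = children

    def tick(self, bb):
        for child in self.children:
            status = child.tick(bb)
            if status is not Status.SUCCESS:
                return status
        return Status.SUCCESS

class Fallback:
    """Ticks children in order; returns the first status that is not FAILURE."""
    def __init__(self, *children):
        self.children = children

    def tick(self, bb):
        for child in self.children:
            status = child.tick(bb)
            if status is not Status.FAILURE:
                return status
        return Status.FAILURE

class Leaf:
    """Wraps a function (blackboard -> Status) as a condition or action node."""
    def __init__(self, fn):
        self.fn = fn

    def tick(self, bb):
        return self.fn(bb)

def human_visible(bb):
    return Status.SUCCESS if bb["human_pose"] is not None else Status.FAILURE

def follow_human(bb):
    bb["goal"] = bb["human_pose"]  # move towards the latest perceived pose
    bb["log"].append("follow")
    return Status.RUNNING          # following continues until the destination

def search_human(bb):
    bb["log"].append("search")
    return Status.RUNNING

follow_tree = Fallback(Sequence(Leaf(human_visible), Leaf(follow_human)),
                       Leaf(search_human))

# Tick the tree once per perception update; None means the human was lost.
bb = {"human_pose": None, "goal": None, "log": []}
for pose in [(1.0, 2.0), None, (1.5, 2.5)]:
    bb["human_pose"] = pose
    status = follow_tree.tick(bb)
```

Re-evaluating the condition on every tick is what lets the tree react immediately when perception loses or regains the human, without an explicit state machine.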

References

[Abdel-Keream2022] Abdel-Keream, M. and Beetz, M. (2022). Imagine a human! UAivatar – simulation framework for human-robot interaction. In IROS 2022 Workshop: Artificial Intelligence for Social Robots Interacting with Humans in the Real World [intellect4hri].

[Abdel-Keream2023-1] Abdel-Keream, M., Beßler, D., Janssen, A., Jongebloed, S., Nolte, R., Pomarlan, M., and Porzel, R. (2023). An ontological model of user preferences. In RobOntics workshop.

[Beetz2018] Beetz, M., Beßler, D., Haidu, A., Pomarlan, M., Bozcuoglu, A. K., and Bartels, G. (2018). KnowRob 2.0 – a 2nd generation knowledge processing framework for cognition-enabled robotic agents. In International Conference on Robotics and Automation (ICRA), Brisbane, Australia.

[Beßler2021] Beßler, D., Porzel, R., Pomarlan, M., Vyas, A., Höffner, S., Beetz, M., Malaka, R., and Bateman, J. (2021). Foundations of the socio-physical model of activities (SOMA) for autonomous robotic agents. In Brodaric, B. and Neuhaus, F., editors, Formal Ontology in Information Systems – Proceedings of the 12th International Conference, FOIS 2021, Bozen-Bolzano, Italy, September 13-16, 2021, Frontiers in Artificial Intelligence and Applications. IOS Press. Accepted for publication.

[Kümpel2023-1] Kümpel, M., Dech, J., Hawkin, A., and Beetz, M. (2023). Evaluation of autonomous shopping assistants using semantic digital twin stores. In AIC’23: 9th workshop on Artificial Intelligence and Cognition. CEUR-WS.

[Kümpel2023-2] Kümpel, M., Dech, J., Hawkin, A., and Beetz, M. (2023). Robotic shopping assistance for everyone: Dynamic query generation on a semantic digital twin as a basis for autonomous shopping assistance. In Proceedings of the 22nd International Conference on Autonomous Agents and Multiagent Systems (AAMAS2023), pages 2523–2525, London, United Kingdom.

[Mania2024] Mania, P., Stelter, S., Kazhoyan, G., and Beetz, M. (2024). An open and flexible robot perception framework for mobile manipulation tasks. In 2024 International Conference on Robotics and Automation (ICRA).

[Nguyen2023] Nguyen, G. H., Beßler, D., Stelter, S., Pomarlan, M., and Beetz, M. (2023). Translating universal scene descriptions into knowledge graphs for robotic environment. ICRA 2024.

[Vanc2023] Vanc, P., Stepanova, K., and Beßler, D. (2023). Ontological context for gesture interpretation. In RobOntics workshop.